Definition

Given a list of messages describing a conversation, the model will return a response.

Create chat completion

POST https://api.openai.com/v1/chat/completions

Creates a model response for the given chat conversation.

Request body

| Parameter | Type | Required | Description |
| --- | --- | --- | --- |
| model | string | Required | ID of the model to use. |
| messages | array | Required | A list of messages describing the conversation so far. |
| role | string | Required | Field of each message. The role of the author of this message. One of system, user, or assistant. |
| content | string | Required | Field of each message. The contents of the message. |
| name | string | Optional | Field of each message. The name of the author of this message. May contain a-z, A-Z, 0-9, and underscores, with a maximum length of 64 characters. |
| temperature | number | Optional | What sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic. |
| top_p | number | Optional | An alternative to sampling with temperature, called nucleus sampling, where the model considers the results of the tokens with top_p probability mass. So 0.1 means only the tokens comprising the top 10% probability mass are considered. |
| n | integer | Optional | How many chat completion choices to generate for each input message. |
| stream | boolean | Optional | If set, partial message deltas will be sent, like in ChatGPT. Tokens will be sent as data-only server-sent events as they become available, with the stream terminated by a data: [DONE] message. |
| stop | string or array | Optional | Up to 4 sequences where the API will stop generating further tokens. |
| max_tokens | integer | Optional | The maximum number of tokens to generate in the chat completion. The total length of input tokens and generated tokens is limited by the model’s context length. |
| presence_penalty | number | Optional | Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far, increasing the model’s likelihood to talk about new topics. |
| frequency_penalty | number | Optional | Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model’s likelihood to repeat the same line verbatim. |
| logit_bias | map | Optional | Modify the likelihood of specified tokens appearing in the completion. Accepts a JSON object that maps tokens (specified by their token ID in the tokenizer) to an associated bias value from -100 to 100. Mathematically, the bias is added to the logits generated by the model prior to sampling. The exact effect will vary per model, but values between -1 and 1 should decrease or increase likelihood of selection; values like -100 or 100 should result in a ban or exclusive selection of the relevant token. |
| user | string | Optional | A unique identifier representing your end-user, which can help OpenAI to monitor and detect abuse. |
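The ranges and limits listed for the request body can be checked on the client before a call is made. The following is a minimal sketch under that assumption; `check_request` is a hypothetical helper written for illustration, not part of any OpenAI library, and it only covers the constraints stated in the parameter list.

```python
import re

# Limits below are transcribed from the parameter descriptions; the helper
# itself is hypothetical and not part of any official client.
NAME_PATTERN = re.compile(r"[a-zA-Z0-9_]{1,64}")
ROLES = {"system", "user", "assistant"}


def check_request(body: dict) -> list:
    """Return a list of human-readable problems found in a request body."""
    problems = []
    if not isinstance(body.get("model"), str):
        problems.append("model is required and must be a string")
    messages = body.get("messages")
    if not isinstance(messages, list):
        problems.append("messages is required and must be an array")
        messages = []
    for i, msg in enumerate(messages):
        if msg.get("role") not in ROLES:
            problems.append(f"messages[{i}].role must be system, user, or assistant")
        if not isinstance(msg.get("content"), str):
            problems.append(f"messages[{i}].content must be a string")
        if "name" in msg and not NAME_PATTERN.fullmatch(msg["name"]):
            problems.append(f"messages[{i}].name has invalid characters or length")
    if "temperature" in body and not 0 <= body["temperature"] <= 2:
        problems.append("temperature must be between 0 and 2")
    for key in ("presence_penalty", "frequency_penalty"):
        if key in body and not -2.0 <= body[key] <= 2.0:
            problems.append(f"{key} must be between -2.0 and 2.0")
    stop = body.get("stop")
    if isinstance(stop, list) and len(stop) > 4:
        problems.append("stop accepts at most 4 sequences")
    for token_id, bias in body.get("logit_bias", {}).items():
        if not -100 <= bias <= 100:
            problems.append(f"logit_bias[{token_id}] must be between -100 and 100")
    return problems
```

An empty list means the body passed these checks; the API may still reject it for reasons not covered here, such as an unknown model ID.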

Example request

    curl https://api.openai.com/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OPENAI_API_KEY" \
      -d '{
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": "Hello!"}]
      }'
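The same request can be issued from Python. This is a rough equivalent of the curl call using only the standard library; `build_request` and `create_chat_completion` are hypothetical names chosen for this sketch, and the key is assumed to be in the `OPENAI_API_KEY` environment variable, as in the curl example.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(api_key: str, body: dict) -> urllib.request.Request:
    """Build the POST request; nothing is sent until urlopen is called."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def create_chat_completion(body: dict) -> dict:
    """Send the request and decode the JSON response body."""
    request = build_request(os.environ["OPENAI_API_KEY"], body)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `create_chat_completion({"model": "gpt-3.5-turbo", "messages": [{"role": "user", "content": "Hello!"}]})` would send the same body as the curl example. Splitting construction from sending keeps the request inspectable without network access.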

Parameters

    {
      "model": "gpt-3.5-turbo",
      "messages": [{"role": "user", "content": "Hello!"}]
    }

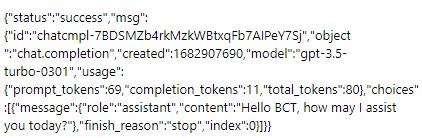
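If `stream` is set in the request body, the reply arrives as data-only server-sent events ending with `data: [DONE]` rather than as one JSON object. The sketch below shows one way such lines might be reassembled. It assumes each event payload is a JSON object whose `choices[0].delta` may carry a `content` fragment; that shape is an assumption not stated in this section, and the sample lines are hypothetical, not captured from a real call.

```python
import json


def collect_stream(lines) -> str:
    """Join content fragments from `data:` lines until the [DONE] sentinel."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and any non-data lines
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker described for the stream parameter
        # Assumed chunk shape: {"choices": [{"delta": {"content": "..."}}]}
        delta = json.loads(payload)["choices"][0].get("delta", {})
        parts.append(delta.get("content", ""))
    return "".join(parts)


# Hypothetical event lines for illustration only.
SAMPLE = [
    'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": "Hello"}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": " there"}}]}',
    "",
    "data: [DONE]",
]
```

With these sample lines, `collect_stream(SAMPLE)` returns `"Hello there"`.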

Response

    {
      "id": "chatcmpl-123",
      "object": "chat.completion",
      "created": 1677652288,
      "choices": [{
        "index": 0,
        "message": {
          "role": "assistant",
          "content": "\n\nHello there, how may I assist you today?"
        },
        "finish_reason": "stop"
      }],
      "usage": {
        "prompt_tokens": 9,
        "completion_tokens": 12,
        "total_tokens": 21
      }
    }
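A caller usually needs only a few fields from this response: the assistant's text, the reason generation stopped, and the token usage. The sketch below pulls those out; `summarize` is a hypothetical helper, and `RAW` mirrors the example response in this section, written as strict JSON.

```python
import json

# Raw string so the \n escapes stay JSON escapes rather than real newlines.
RAW = r"""
{
  "id": "chatcmpl-123",
  "object": "chat.completion",
  "created": 1677652288,
  "choices": [{
    "index": 0,
    "message": {
      "role": "assistant",
      "content": "\n\nHello there, how may I assist you today?"
    },
    "finish_reason": "stop"
  }],
  "usage": {"prompt_tokens": 9, "completion_tokens": 12, "total_tokens": 21}
}
"""


def summarize(raw: str) -> dict:
    """Extract the first choice's text, finish reason, and total token count."""
    data = json.loads(raw)
    first = data["choices"][0]
    return {
        "reply": first["message"]["content"].strip(),
        "finish_reason": first["finish_reason"],
        "total_tokens": data["usage"]["total_tokens"],
    }
```

Here `total_tokens` is the sum of `prompt_tokens` and `completion_tokens` (9 + 12 = 21). When `n` is greater than 1, `choices` holds several entries and this sketch reads only the first.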

Call result